This is not investment advice. The author has no position in any of the stocks mentioned. Wccftech.com has a disclosure and ethics policy.

Perhaps investors have started paying heed to the recent advice from ARK Invest’s Cathie Wood to seek shelter in greener AI pastures now that NVIDIA has joined the trillion-dollar market capitalization club. While AMD’s share price is down today, the stock’s call skew is anything but.

Time for an AI breather?

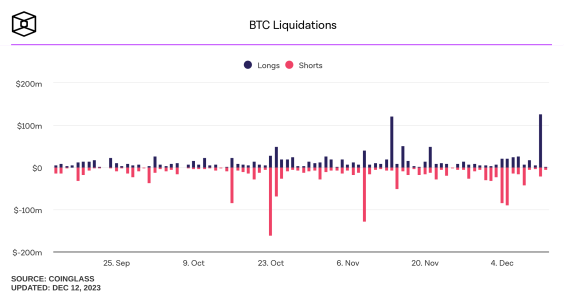

AMD call skew today (white) vs peak mania & ATH's in Nov '21 (red).

The skew jumped from Friday AM (green) as the stock added 3.8% to be up nearly 50% for the month of May.

Elevated call skews often indicate that traders are anticipating higher upward… pic.twitter.com/EsdjJ1hXmv

— SpotGamma (@spotgamma) May 30, 2023

As explained by SpotGamma in the tweet above, AMD’s call skew in early trading today eclipsed the levels last recorded during the November 2021 peak equity mania phase. The skew has jumped since Friday morning, when the stock added 3.8 percent to bring its gain for the month of May to nearly 50 percent.

As a refresher, the skew refers to the disparity in implied volatility (IV) pertaining to options contracts that have the same expiration but different strike prices. As a general rule, remember that the higher the demand for a particular options contract, the higher its implied volatility.
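To make the definition concrete, here is a minimal Python sketch of one simple way to put a number on call skew: the IV of an out-of-the-money call minus the IV of the at-the-money call for the same expiration. This is only one of several conventions in use, and it is not necessarily the measure SpotGamma plots. The `OptionQuote` type, the `call_skew` helper, the 10 percent moneyness cutoff, and every quote in `chain` are hypothetical and invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OptionQuote:
    strike: float
    implied_vol: float  # annualized, as a decimal (0.45 means 45%)


def call_skew(quotes, spot, otm_moneyness=1.10):
    """Return OTM call IV minus ATM call IV for a single expiration.

    Assumption: the ATM call is the quote whose strike is nearest to spot,
    and the OTM call is the quote nearest to spot * otm_moneyness.
    """
    if not quotes:
        raise ValueError("need at least one quote")
    atm = min(quotes, key=lambda q: abs(q.strike - spot))
    otm = min(quotes, key=lambda q: abs(q.strike - spot * otm_moneyness))
    return otm.implied_vol - atm.implied_vol


# Hypothetical same-expiration call quotes (not real AMD data).
chain = [
    OptionQuote(strike=110.0, implied_vol=0.50),
    OptionQuote(strike=120.0, implied_vol=0.48),
    OptionQuote(strike=130.0, implied_vol=0.52),
    OptionQuote(strike=140.0, implied_vol=0.55),
]

skew = call_skew(chain, spot=120.0)
print(f"call skew: {skew:+.2%}")
```

A positive result means the higher-strike calls are priced with more implied volatility than the at-the-money calls, which is the pattern that heavy demand for upside calls tends to produce.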

Of course, this dramatic jump in AMD’s call IV skew has fundamental underpinnings. The company is the only viable competitor to NVIDIA when it comes to GPUs, which are the preferred hardware for training Large Language Models (LLMs) such as the one behind ChatGPT. Moreover, AMD recently inked a comprehensive partnership with Microsoft whereby the latter would assist the chipmaker in designing AI-focused processors. The partnership also extends to Microsoft’s bespoke processor for AI workloads, which bears the code-name Athena.

Nonetheless, NVIDIA has a massive head start over AMD in the AI sphere. In fact, as per a tabulation by JPMorgan, NVIDIA is expected to scoop up as much as 60 percent of AI-related spending this year. NVIDIA is so confident of the prowess of its GPUs in training LLMs that it recently presented a total cost of ownership (TCO) analysis at Computex 2023, whereby the cost of training an LLM could be slashed from $10 million to just $400,000 by using the chipmaker’s GPU clusters.

There is, of course, a silver lining for AMD. The future of AI-related computing lies in inference and fine-tuning, which are software-based operations. Eventually, inference workloads will become homogeneous enough to allow for the commoditization of the underlying software, thereby chipping away at NVIDIA’s advantage and allowing AMD to play catch-up.