This is not investment advice. The author has no position in any of the stocks mentioned. Wccftech.com has a disclosure and ethics policy.

As xAI was preparing to unveil its first Large Language Model (LLM) called Grok, Elon Musk boldly declared that the generative AI model "in some important respects" was the "best that currently exists." Now, we finally have the data to prove this claim.

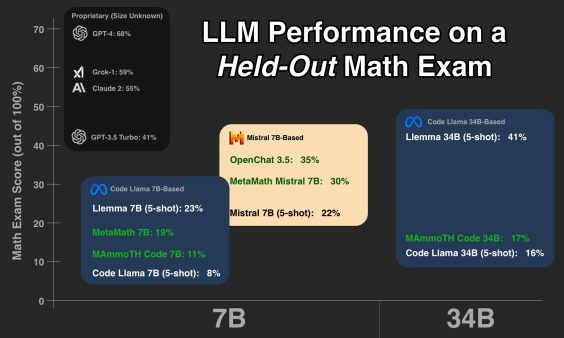

Kieran Paster, a researcher at the University of Toronto, recently put a number of AI models through their proverbial paces by testing them on a held-out math exam. Bear in mind that held-out questions, in data analytics parlance, are ones that are not part of the dataset that is used to train an AI model. Hence, a given LLM has to leverage its prior training and problem-solving skills to respond to such stimuli. Paster then hand-graded the responses of each model.

Grok AI's Performance on Held-Out Math Exam

As is evident from the above snippet, Grok outperformed every other LLM, including Anthropic's Claude 2, with the exception of OpenAI's GPT-4, earning a total score of 59 percent vs. 68 percent for GPT-4.

Grok AI's Performance on GSM8k vs. the Held-Out Math Exam

Next, Paster leveraged xAI's testing of various LLMs on GSM8k, a dataset of math word problems that is geared toward middle school, to plot the performance of these LLMs on the held-out math exam against their performance on the GSM8k.

Interestingly, while OpenAI's ChatGPT-3.5 gets a higher score than Grok on the GSM8k, it manages to secure only half of Grok's score on the held-out math exam. Paster uses this outcome to justify his conclusion that ChatGPT-3.5's outperformance on the GSM8k is simply a result of overfitting, which occurs when an LLM gives accurate results for the input data that is used in its training but not for new data. For instance, an AI model trained to identify pictures that contain dogs and trained on a dataset of pictures showing dogs in a park setting might use grass as an identifying feature to give the sought-after correct answer.

If we exclude all models that likely suffer from overfitting, Grok ranks an impressive third on the GSM8k, behind only Claude 2 and GPT-4. This suggests that Grok's inference capabilities are quite strong.

Of course, a crucial limitation in comparing these models is the lack of information on the number of training parameters that were used to train GPT-4, Claude 2, and Grok. These parameters are the configurations and conditions that collectively govern the learning process of an LLM. As a general rule, the greater the number of parameters, the more complex is an AI model.

As another distinction, Grok apparently has an unmatched innate "feel" for news. As per the early impressions of the LLM's beta testers, xAI's Grok can distinguish between various biases that might tinge a breaking story. This is likely a direct result of Grok's training on the data sourced from X.